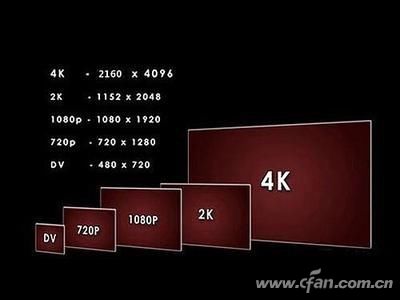

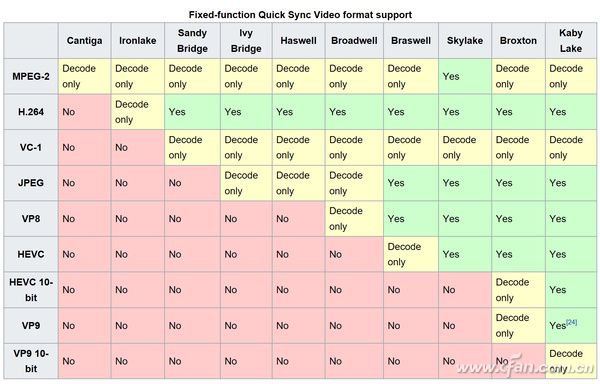

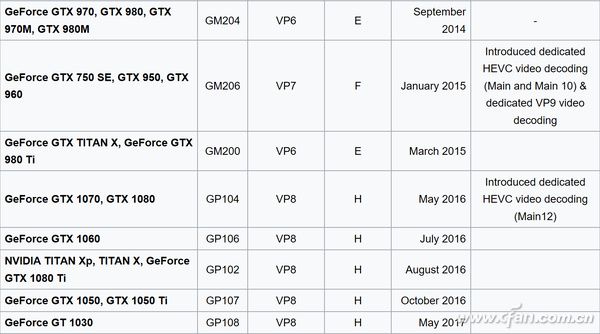

4K has finally started to gain momentum now that 1080P is everywhere. This year, the growing availability of 4K movie downloads has given it a further boost. On the PC side, monitors are gradually moving to 2K and 4K, and CPUs and graphics cards routinely advertise 4K support. Since PC entertainment mostly comes down to gaming, light media use, and video playback, the natural question is: how much does it really cost to watch 4K movies on a PC? Let's take a closer look.

The Three Essentials of 4K

A full 4K experience depends on three things: decoding, display, and output. Decoding here means hardware video decoding; falling back to software decoding on the CPU significantly raises power consumption and system load. Display means a screen with a native 4K resolution (3840×2160 or 4096×2160). Output means the connection between the two: HDMI 2.0 is the most common standard on home devices, while computers typically use DisplayPort 1.4 (DisplayPort 1.2 already carries 4K@60Hz, but HDR needs 1.4).

Fun fact: strictly speaking, "4K" in the digital cinema (DCI) sense means 4096×2160. What consumer products sell as 4K Ultra HD is 3840×2160 (also known as Quad Full HD), which is cheaper to produce and matches the 16:9 aspect ratio used throughout consumer electronics.

What Is a Decoding Solution?

To play 4K content properly, your computer must support both decoding and output. 4K video is typically encoded in H.265/HEVC or VP9, often with 10-bit color depth and HDR (up to the BT.2020 color space). 1080P content, by contrast, was mostly encoded in H.264, VC-1, or VP8, which are far less demanding. H.265 achieves its higher compression ratio at the cost of more complex decoding, so a GPU with a dedicated decode block for these codecs is essential, and only recent models have one.
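To put the resolutions and bit depths mentioned above into numbers, here is a minimal Python sketch. The `VideoFormat` class and its method names are illustrative only, and the calculation counts active pixels alone: it ignores blanking intervals, link encoding overhead, and chroma subsampling, so treat the result as a rough lower bound rather than the exact rate carried on a cable.

```python
from dataclasses import dataclass
from math import gcd


@dataclass(frozen=True)
class VideoFormat:
    """A display resolution (hypothetical helper, for illustration only)."""
    name: str
    width: int
    height: int

    @property
    def pixels(self) -> int:
        # Total pixels in one frame.
        return self.width * self.height

    @property
    def aspect_ratio(self) -> str:
        # Reduce width:height to lowest terms, e.g. 3840x2160 -> "16:9".
        d = gcd(self.width, self.height)
        return f"{self.width // d}:{self.height // d}"

    def raw_gbps(self, fps: int = 60, bits_per_channel: int = 10,
                 channels: int = 3) -> float:
        # Uncompressed active-pixel data rate in Gbit/s.
        # Assumption: full-resolution color channels, no blanking overhead.
        return self.pixels * fps * bits_per_channel * channels / 1e9


FULL_HD = VideoFormat("1080P", 1920, 1080)
UHD = VideoFormat("4K UHD", 3840, 2160)
DCI_4K = VideoFormat("DCI 4K", 4096, 2160)

if __name__ == "__main__":
    for fmt in (FULL_HD, UHD, DCI_4K):
        print(f"{fmt.name}: {fmt.pixels:,} pixels, "
              f"aspect {fmt.aspect_ratio}, "
              f"{fmt.raw_gbps():.2f} Gbit/s raw at 60 fps, 10-bit")
```

Under these assumptions, 3840×2160 has exactly four times the pixels of 1080P, and at 60 fps with 10 bits per channel it works out to roughly 14.9 Gbit/s uncompressed. That gap between raw video and what a download or disc can deliver is why 4K leans so heavily on efficient codecs and on hardware that can decode them.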
CPU Support for 4K

Intel's hardware video solution is Quick Sync Video. Starting with Kaby Lake (7th Gen Core), Intel CPUs can decode 4K H.265/HEVC 10-bit video in hardware, and the same generation brought hardware VP9 decoding, which 8th Gen Core carries forward. However, this only works through the integrated graphics, so the iGPU must be present and enabled. AMD's desktop Ryzen CPUs have no integrated graphics and therefore cannot hardware-decode video on their own; only APUs (such as the Ryzen APU series) can.

GPU Support for 4K

NVIDIA's PureVideo supports 4K HEVC 10-bit decoding from the VP7 generation of its video processor onward. In the GTX 900 series, support is limited: only the GTX 950 and GTX 960 have VP7. The Pascal-based GTX 1000 series (GP102, GP104, and so on) supports 4K HEVC 10-bit decoding across the board.

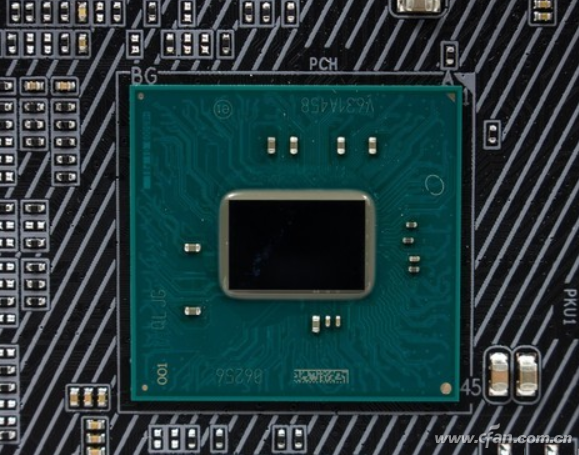

For AMD, 4K HEVC 10-bit decoding requires UVD 6.3 or later, which currently means the RX 400, RX 500, and Vega series. Upcoming Ryzen-based APUs are expected to add this support as well.

External Interfaces Matter

Don't underestimate the importance of external interfaces when it comes to 4K. Your display must have an HDMI 2.0 or DisplayPort 1.4 input to support 4K@60Hz with HDR. If your monitor or TV falls short of that, you won't get the full 4K experience. Moving large 4K files around also calls for at least a USB 3.0 port; at a nominal 480 Mbit/s, USB 2.0 is too slow to copy such files in a reasonable time.

As you can see, full 4K capability isn't cheap. The cost is not a single expensive purchase but the need for every component in the chain to meet the standard. Whether it's worth it depends on your personal needs and how much you value the improved picture.

Can Affordable 4K Be Achieved?

Yes, in two ways. The first is a dedicated 4K media player box, which costs around 300 yuan, although quality varies and it is limited to playback. The second is the new Atom-class Gemini Lake processors found on mini motherboards, which can decode and play 4K content while still working as a regular PC. These systems come with HDMI 2.0 and USB 3.0 ports, making them a more accessible option for casual users. Of course, you'll still need a 4K display to make the most of either.

In short, 4K delivers stunning visuals, but at a steep price. Remember that 1080P took about a decade to become mainstream. 4K may follow a similar path on a shorter timeline, perhaps within five years. Until then, it's a matter of balancing cost, performance, and personal preference.
